This is Part 5 in an ongoing series on disk performance. You can read the entire series by starting at the Introduction.

In Part 2 and Part 3 of this series I looked at RAID 10 and RAID 5 performance, respectively. Now I’ll show how the two compare against each other. For this comparison I’ll use all three of my test harnesses (view the test harness specifics here). For all comparisons I am using a 64 KB RAID stripe with a 64 KB partition offset and a 64 KB allocation unit size. As with my previous posts, I’ll focus on OLTP data activity (8 KB random reads/writes).
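To see why the stripe size, partition offset, and I/O size are lined up, here is a minimal Python sketch that checks whether a partition offset falls on a stripe boundary and counts how many stripe units an 8 KB I/O touches. The 63-sector (31.5 KB) offset used for contrast is the legacy default of older Windows versions; the helper names are hypothetical, and the sketch assumes every I/O starts on an 8 KB boundary within the partition.

```python
KB = 1024


def is_aligned(offset_bytes: int, stripe_bytes: int) -> bool:
    """True if a partition starting at offset_bytes begins on a stripe-unit boundary."""
    return offset_bytes % stripe_bytes == 0


def stripe_units_touched(io_start: int, io_size: int, stripe_bytes: int) -> int:
    """Number of stripe units a single I/O spans (1 means it stays within one unit)."""
    first = io_start // stripe_bytes
    last = (io_start + io_size - 1) // stripe_bytes
    return last - first + 1


def worst_case_units(partition_offset: int, io_size: int, stripe_bytes: int, n_ios: int = 1000) -> int:
    """Worst case over n_ios consecutive, io_size-aligned I/Os inside the partition."""
    return max(
        stripe_units_touched(partition_offset + n * io_size, io_size, stripe_bytes)
        for n in range(n_ios)
    )


stripe = 64 * KB    # 64 KB RAID stripe, as used in this post
io_size = 8 * KB    # 8 KB OLTP I/O
aligned = 64 * KB   # 64 KB partition offset, as used in this post
legacy = 63 * 512   # legacy 63-sector (31.5 KB) offset, for contrast only

print(is_aligned(aligned, stripe), worst_case_units(aligned, io_size, stripe))  # True 1
print(is_aligned(legacy, stripe), worst_case_units(legacy, io_size, stripe))    # False 2
```

With the 64 KB offset every 8 KB I/O stays inside one stripe unit; with the 31.5 KB offset some I/Os straddle two units, which can cost an extra physical I/O.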

Test Harness #1: Dell PowerEdge 2950

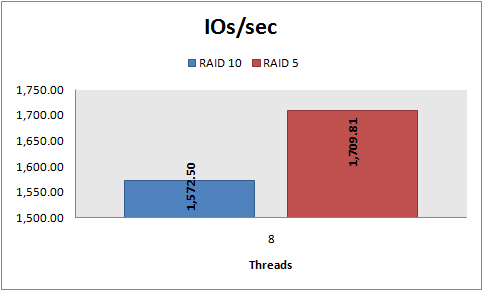

These tests were performed using 4 physical SCSI drives with 8 threads against a 64 GB data file at an I/O queue depth of 8.

Here’s what 8 KB random reads look like:

And here’s what 8 KB random writes look like:

Test Harness #2: Dell PowerVault 220s

Unlike the PowerEdge 2950, which uses local drives, the 220s is a standalone enclosure commonly run in “split bus” mode, which divides the drives in the enclosure into two groups and dedicates an independent SCSI bus to each group. This set of tests was run in split bus mode using 12 physical SCSI drives (i.e., 6 drives per SCSI bus). I tested workloads of 4, 8, and 16 threads against a 512 GB data file; each set of tests used an I/O queue depth of 8.

Here’s what 8 KB random reads look like:

And here’s what 8 KB random writes look like:

Test Harness #3: Dell PowerVault MD1000

The PowerVault MD series is Dell’s current offering in DASD enclosures. The MD1000 is the replacement for the 220s and supports both SAS and SATA drives, and just like the 220s it can operate in split bus mode. Similar to my tests against the 220s, this series was run in split bus mode using 12 physical SAS drives with workloads of 4, 8, and 16 threads against a 512 GB data file using an I/O queue depth of 8.
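Because the disk counts differ between harnesses, it can help to normalize the load per spindle. The sketch below assumes the queue depth of 8 applies per thread, so total outstanding I/Os equal threads times queue depth; the post does not spell this out, so treat the numbers as illustrative. The function name is hypothetical.

```python
def outstanding_per_disk(threads: int, queue_depth: int, disks: int) -> float:
    """Average outstanding I/Os per physical disk.

    Assumes queue_depth is per thread, so the total is threads * queue_depth.
    """
    return threads * queue_depth / disks


QUEUE_DEPTH = 8

# Harness #1: PowerEdge 2950, 4 disks, 8 threads
print("2950, 8 threads:", outstanding_per_disk(8, QUEUE_DEPTH, 4))

# Harnesses #2 and #3: 220s / MD1000, 12 disks, 4/8/16 threads
for threads in (4, 8, 16):
    print(f"12 disks, {threads} threads:", round(outstanding_per_disk(threads, QUEUE_DEPTH, 12), 2))
```

Under that assumption, the 4-disk 2950 at 8 threads keeps about three times as many requests queued per disk (16 versus roughly 5.33) as the 12-disk enclosures at the same thread count, which is worth keeping in mind when results are compared per disk.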

Here’s what 8 KB random reads look like:

And here’s what 8 KB random writes look like:

Conclusion

Generally speaking, RAID 5 offers better read performance while RAID 10 offers better write performance; that’s common knowledge. The performance graphs from each test illustrate that, but to drive the point home I’ll translate the numbers into percentages. The chart below shows the RAID 10 metrics relative to RAID 5. Red numbers indicate where RAID 10 performed worse; black numbers indicate where RAID 10 performed better. (Higher values for IOs/sec and MBs/sec are better, so negative numbers in those columns mean a drop in performance. Lower latency is better, so a negative number in the latency column means a drop in latency, which is an increase in performance.)
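The percentages described above are a simple relative change with RAID 5 as the baseline. A minimal sketch follows; the input values are made-up placeholders, not figures from these tests.

```python
def relative_to_baseline(value: float, baseline: float) -> float:
    """Percent change of value relative to baseline."""
    return (value - baseline) / baseline * 100.0


# Hypothetical placeholder numbers, not measurements from this series
raid10_write_iops, raid5_write_iops = 1200.0, 800.0
raid10_latency_ms, raid5_latency_ms = 6.0, 10.0

# RAID 10 relative to RAID 5 (the chart's convention)
print(round(relative_to_baseline(raid10_write_iops, raid5_write_iops), 1))  # 50.0: higher IOs/sec is better
print(round(relative_to_baseline(raid10_latency_ms, raid5_latency_ms), 1))  # -40.0: lower latency is better

# The same pair of numbers with RAID 10 as the baseline
print(round(relative_to_baseline(raid5_write_iops, raid10_write_iops), 1))  # -33.3
```

The last line shows why "RAID 10 is 50% faster" and "RAID 5 is 50% slower" are not the same statement: the percentage depends on which configuration is the baseline.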

I knew that RAID 5 has slower write performance than RAID 10, but roughly 65% slower for IOs/sec and MBs/sec? Wow! Arm yourself with this knowledge the next time somebody suggests that it isn’t so bad to use RAID 5 instead of RAID 10, especially if your database is write-intensive.
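A common rule of thumb explains the direction of this gap: each small random write costs two back-end disk I/Os on RAID 10 (one per mirror) but four on RAID 5 (read old data, read old parity, write new data, write new parity). The sketch below applies that penalty; the per-disk IOPS figure is a hypothetical placeholder, and real arrays deviate from the model (controller write-back caching, for example).

```python
# Back-end disk I/Os per small random front-end write (rule of thumb)
WRITE_PENALTY = {"RAID 10": 2, "RAID 5": 4}


def random_write_iops(disks: int, per_disk_iops: float, level: str) -> float:
    """Estimated front-end random-write IOPS for an array, ignoring cache effects."""
    return disks * per_disk_iops / WRITE_PENALTY[level]


PER_DISK_IOPS = 150.0  # hypothetical per-spindle random IOPS

for level in ("RAID 10", "RAID 5"):
    print(level, random_write_iops(12, PER_DISK_IOPS, level))
# RAID 10 900.0
# RAID 5 450.0
```

In this idealized model RAID 5 delivers half the random-write IOPS of RAID 10 on the same disks, whatever the per-disk figure.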

RAID 10 Performance From RAID 5?

As I was writing this series, my friend Andy Warren asked me an interesting question: What would it take to get RAID 10 performance from a RAID 5 configuration? (Obviously he meant write performance, since RAID 5 already offers better read performance.) Honestly, I had never thought of that angle before, but I can see how it might make sense in some circumstances.

I’ll pretend that I’m being asked to set up a RAID 5 array on a PowerVault MD1000 that gives me performance equivalent to the RAID 10 array I configured on the PowerEdge 2950. For the sake of this exercise I’ll ignore latency and focus on IOs/sec and MBs/sec. Here comes the voodoo math: at 8 threads, 8 KB random writes on the 12-disk RAID 5 configuration on the MD1000 come out to 71.23 IOs/sec and 0.56 MBs/sec per disk (that’s just the totals divided by 12). The RAID 10 results for the 4-drive configuration on the PowerEdge 2950 were 617.12 IOs/sec and 4.82 MBs/sec; to reach those numbers using the voodoo math I just did, I’d need 9 disks in a RAID 5 configuration, more than twice the number of disks the RAID 10 array required to begin with.
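The voodoo math above fits in a few lines of Python. It divides each target by the per-disk rate and rounds up, so it carries the same simplifying assumption that write throughput scales linearly with disk count. All four inputs are the figures quoted above.

```python
import math

# 12-disk RAID 5 on the MD1000, 8 threads, 8 KB random writes (totals / 12)
raid5_iops_per_disk = 71.23
raid5_mbs_per_disk = 0.56

# 4-disk RAID 10 on the PowerEdge 2950, 8 KB random writes
target_iops = 617.12
target_mbs = 4.82
raid10_disks = 4

# Sanity check: MBs/sec should be roughly IOs/sec * 8 KB
print(round(raid5_iops_per_disk * 8 / 1024, 2), round(target_iops * 8 / 1024, 2))  # 0.56 4.82

# Round up: a fraction of a disk still means buying a whole disk
disks_for_iops = math.ceil(target_iops / raid5_iops_per_disk)
disks_for_mbs = math.ceil(target_mbs / raid5_mbs_per_disk)
print(disks_for_iops, disks_for_mbs)                       # 9 9
print(max(disks_for_iops, disks_for_mbs) / raid10_disks)   # 2.25
```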

So can you get RAID 10 performance out of RAID 5? Sort of. Granted, my math was simplistic at best, but it’s obvious that you can’t just add a few extra disks to a RAID 10 array, reconfigure them as RAID 5, and expect to see equivalent performance; it’s probably going to take 2X the disks, and practically speaking, the numbers just don’t add up to make it worthwhile.

Stay tuned: in Part 6 I will look at how RAID 10 compares against RAID 1.

15 comments

Would these figures indicate that RAID 5 is better than its reputation?

Say your load is approximately 70% reads and 30% writes. Wouldn't that give roughly equal results?

I believe today the rule of thumb is: if writes are more than 5% (or 10%) of the workload, use RAID 10.

The recommendation could then be to use RAID 5 for data files and RAID 1 or 10 for logs.

Perhaps, but in my experience the decision to go with RAID 5 is based more on storage needs than on performance. That said, I don't think there's a magic number where you can draw the line. It's really subjective: if write performance is acceptable to the people who use the server, then they couldn't care less whether it's RAID 5 or RAID 10 under the covers.

I spent several hours this week trying to find an honest and unbiased answer to the RAID 5 vs. RAID 10 question; you answered it well, thank you.

Based on the data you provided comparing RAID 5 and RAID 10, I drew a little chart for 8 KB random reads/writes. It shows that with more than 62% reads, RAID 5 will outperform RAID 10.
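A crossover point like that can be estimated from the pure-read and pure-write results. The sketch below assumes mixed-workload throughput is a straight-line blend of the two (the commenter's exact method isn't stated, and a time-weighted blend would give a different answer); the IOPS values are hypothetical placeholders, not the post's measurements.

```python
def blended_iops(read_iops: float, write_iops: float, read_fraction: float) -> float:
    """Straight-line blend of pure-read and pure-write throughput."""
    return read_fraction * read_iops + (1 - read_fraction) * write_iops


def break_even_read_fraction(r5_read: float, r5_write: float, r10_read: float, r10_write: float) -> float:
    """Read fraction above which RAID 5 beats RAID 10 under the linear blend."""
    read_gain = r5_read - r10_read      # RAID 5's advantage on reads
    write_loss = r10_write - r5_write   # RAID 10's advantage on writes
    return write_loss / (read_gain + write_loss)


# Hypothetical placeholder IOPS, not measurements from this series
r5_read, r5_write = 1000.0, 400.0
r10_read, r10_write = 900.0, 800.0

x = break_even_read_fraction(r5_read, r5_write, r10_read, r10_write)
print(x)  # 0.8
print(blended_iops(r5_read, r5_write, x), blended_iops(r10_read, r10_write, x))
```

Above the returned read fraction RAID 5 comes out ahead; below it RAID 10 does.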

Thanks for the data. Do you have measurements of the performance impact when the array is missing one drive?

Will RAID 5 take less of a hit than RAID 10?

This is the best comparison I have seen to date in my quest to figure out which RAID level to use on my new MD3000. Thank you.

My question: I plan on setting up a VM server farm for Microsoft development systems (Visual Studio/SQL Server/IIS). There is also a lightly used website and a SQL Server app running that are not a concern today.

Does anyone know what a good recommendation is for a Hyper-V VM farm running on an MD3000? Getting more drives is not the issue; my lack of knowledge is. I am looking for reasons to use RAID 10, RAID 5, or something else that will give optimal performance for the VMs and their corresponding 25-40 GB virtual hard drives.

RAID 5 has horrible performance when it has lost one drive. It also has horrible performance when rebuilding the array after losing one drive, as it has to read every single block of every surviving drive in the array to regenerate the lost disk. If you lose 2 drives in a RAID 5, you are guaranteed to lose all of your data! It is simply unsafe for critical enterprise storage. Just say no to RAID 5!!!

With RAID-10 you may still lose ALL of your data when 2 drives suddenly die at the same time. Is that actually a whole lot safer, having the odds of losing all your data or none of your data in the fairly extreme case that 2 disks die at the same time?

If you use proper hardware (good controllers and good drives), RAID5 is a perfectly fine solution that will benefit you a lot for applications that need many heavy read actions. A good example would be streaming media servers.

>> With RAID-10 you may still lose ALL of your data when 2 drives suddenly die at the same time. Is that actually a whole lot safer, having the odds of losing all your data or none of your data in the fairly extreme case that 2 disks die at the same time?

The only time this is true is if both drives are in the same RAID 1 set in the stripe, which is possible but highly unlikely. If you have disks A/B, C/D, E/F, and G/H in RAID 1 pairs and stripe across those pairs for RAID 1+0, you can lose 1 disk from each of the RAID 1 pairs, up to 4 disks, and the array survives with no data loss and no performance loss either; that is the benefit of RAID 10. Disk A or B could fail along with disk C or D, E or F, and G or H, but A and B, or C and D, or E and F, or G and H failing together would result in loss of the array.
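How unlikely is it? Assuming a second failure is equally likely to hit any of the remaining disks, the chance that two failures land on the same mirror pair can be counted directly. A minimal sketch, with disks numbered so that 0/1, 2/3, and so on form the pairs (the same layout as A/B, C/D above); the function names are hypothetical.

```python
from itertools import combinations


def mirror_pair(disk: int) -> int:
    """Disks 0/1 form pair 0, 2/3 form pair 1, and so on."""
    return disk // 2


def p_loss_from_two_failures(pairs: int) -> float:
    """Probability that two random disk failures destroy a RAID 10 array built from mirror pairs."""
    failures = list(combinations(range(2 * pairs), 2))
    fatal = sum(1 for a, b in failures if mirror_pair(a) == mirror_pair(b))
    return fatal / len(failures)


for pairs in (2, 4, 6):
    disks = 2 * pairs
    print(disks, "disks:", round(p_loss_from_two_failures(pairs), 3),
          "vs 1/(n-1) =", round(1 / (disks - 1), 3))
```

For the eight-disk A/B through G/H example, one double failure in seven (about 14%) hits the same pair; with RAID 5, every double failure is fatal.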

And what about a no-RAID configuration, in some specific cases?

Let's imagine that:

- you focus only on write performance and don't care about redundancy,

- you have 5 SATA drives,

- you must write 20 data streams almost equivalent in throughput, each of them in a different directory.

Wouldn't it be a better solution not to configure a RAID array at all, but instead to create one partition per disk and write each data stream to a given disk/partition: streams 1 to 4 to disk 1, streams 5 to 8 to disk 2, etc.?
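Spreading 20 streams evenly over 5 disks works out to 4 streams per disk. A minimal sketch of that contiguous assignment; the mount-point names are hypothetical.

```python
STREAMS = 20
DISKS = 5
STREAMS_PER_DISK = STREAMS // DISKS  # 4


def disk_for_stream(stream: int) -> int:
    """Map a 1-based stream number to a 1-based disk number in contiguous blocks."""
    return (stream - 1) // STREAMS_PER_DISK + 1


# Hypothetical mount points, one partition per disk
layout = {s: f"/mnt/disk{disk_for_stream(s)}/stream{s:02d}" for s in range(1, STREAMS + 1)}
print(layout[1], layout[4], layout[5], layout[20])
# /mnt/disk1/stream01 /mnt/disk1/stream04 /mnt/disk2/stream05 /mnt/disk5/stream20
```

A round-robin mapping, `(stream - 1) % DISKS + 1`, would balance the streams just as evenly; contiguous blocks simply keep neighboring streams on the same disk.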

The scaling of the charts in this article leads to incredibly misleading bars. For example, the first graph, IOs/sec read, shows a RAID 5 column more than twice as high as the RAID 10, yet the difference between the two is less than 9%. In contrast, the same chart for IOs/sec write (4th) shows a RAID 10 bar that is only slightly higher than RAID 5, yet the RAID 10 number is 59% higher! The use of different scales on each of the charts leads to meaningless visual information.

I test my systems with SQLIO; both systems used the same disks and SAN (EVA4400). The RAID5 disks were divided on 42 disks, while the RAID10 disks were divided between 72 disks. The performance of the RAID 10 disks was way better than the performance of the RAID 5 disks for both reads and writes (random and sequential).

"The RAID5 disks were divided on 42 disks, while the RAID10 disks were divided between 72 disks. "

The question is: RAID 10 on 42 disks or RAID 5 on 72 disks, which one is better?!
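One rough way to frame that question uses the write-penalty rule of thumb: one back-end I/O per random read for both levels, two per random write for RAID 10, and four for RAID 5. The per-disk IOPS value is a hypothetical placeholder and caching is ignored, so this is a sketch of the trade-off, not an answer for the EVA4400.

```python
def front_end_iops(disks: int, per_disk_iops: float, read_fraction: float, write_penalty: int) -> float:
    """Estimated random IOPS for a read/write mix under the write-penalty rule of thumb."""
    backend_ios_per_request = read_fraction + (1 - read_fraction) * write_penalty
    return disks * per_disk_iops / backend_ios_per_request


PER_DISK_IOPS = 100.0  # hypothetical per-spindle random IOPS

for read_fraction in (0.0, 0.5, 1.0):
    raid10 = front_end_iops(42, PER_DISK_IOPS, read_fraction, write_penalty=2)
    raid5 = front_end_iops(72, PER_DISK_IOPS, read_fraction, write_penalty=4)
    print(f"{read_fraction:.0%} reads: RAID 10 on 42 disks {raid10:.0f}, RAID 5 on 72 disks {raid5:.0f}")
```

Under this model the 42-disk RAID 10 set wins for write-heavy mixes, and the 72-disk RAID 5 set pulls ahead once reads exceed roughly 44% (4/9) of the workload.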

Thanks a lot for sharing your results. I have a question for you: I have a 4-disk RAID 10 on a Dell PERC 6/i. Will adding two more disks increase performance?

The scaling of the graphs is dangerously misleading. The graphs that show speed improvements in RAID 5 are scaled ridiculously small, where graphs with improvements in RAID 10 are scaled ridiculously large.

On RAID 5 vs 10 performance: A four disk RAID 5 set can only ever use 3 disks for reading and writing. The last disk only stores parity information and is only used in the event of disk failure. Writes take a significant hit due to computation of the parity information. Reads take a hit because all 3 disks are needed for each read. A four disk RAID 10 (not 0+1) set is able to use all 4 disks for read operations, as only one disk from each mirror set is required, allowing two simultaneous reads to occur. In the event of a failure, RAID 5 takes a read hit of up to 70% across the set, whereas RAID 10 only loses one set of drives (in a 4 drive set, 50% reads, 0% writes, in a 6 drive set, 33% reads, 0% writes, and so on) resulting in a much improved and more graceful failure situation.

On redundancy: RAID 5 can survive the loss of only one drive; losing any 2 drives results in a total loss (TL). Even if 10 drives are used in the set, the loss of any 2 will result in a total loss. This makes RAID 5 sets statistically more likely to suffer a total loss as you add more drives. In a RAID 10 set, a total loss event can only occur if all drives from a single mirror set are lost; otherwise, a RAID 10 set can sustain the loss of all but one drive from each mirror set. With the drives configured as two mirrored sets, a 4-disk set has Min to TL: 2, Max to TL: 3; a 6-disk set has Min to TL: 3, Max to TL: 5; and an 8-disk set has Min to TL: 4, Max to TL: 7. This makes a total loss event in RAID 10 statistically less likely for each pair of drives added to the RAID set.
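The min/max figures follow from how the mirror sets are sized: the array dies when any one set loses all of its disks, and it can absorb at most one fewer than that in every set before the next failure is fatal. A minimal sketch (the function name is hypothetical) that takes the number of disks in each mirror set:

```python
def failures_to_total_loss(mirror_sets: list[int]) -> tuple[int, int]:
    """(min, max) number of disk failures that destroys a RAID 10 array.

    mirror_sets lists how many disks are in each mirror set.
    """
    min_tl = min(mirror_sets)                     # lose every disk of the smallest set
    max_tl = sum(k - 1 for k in mirror_sets) + 1  # survive k - 1 losses per set, then one more
    return min_tl, max_tl


# Two mirror sets that grow with the disk count
for size in (2, 3, 4):
    print(2 * size, "disks as two sets:", failures_to_total_loss([size, size]))

# Conventional two-disk mirror pairs
for pairs in (2, 3, 4):
    print(2 * pairs, "disks as pairs:", failures_to_total_loss([2] * pairs))
```

With ordinary two-disk mirror pairs the minimum stays at 2 no matter how many disks are added; only the maximum grows.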

RAID 5’s evolution only ever occurred due to the high cost of drives and the low density of drive bays then available in servers. RAID 10 is the superior choice for all situations regarding performance and redundancy, and should be used over RAID 5 whenever possible. The author’s conclusions are misinformed and should not be considered when building a server deployment strategy. For a factual and accurate comparison, find Microsoft’s SQL Server deployment white paper and read the section on disk subsystems. That will clearly show the performance characteristics of the two RAID types. Spoiler Alert: RAID 5 doesn’t win.

One error to correct in the previous anonymous comment: "A four disk RAID 5 set can only ever use 3 disks for reading and writing" is a common misconception. In fact there is one disk's worth of parity data, but it's striped across all the disks in the array along with the data. So in this example all four disks are still spinning simultaneously.
